It’s important to Booking.com that their employees have a full understanding of how they are performing in their roles. To that end, an internal team was established to encourage more transparency between employees and managers, so that employees would better understand exactly how they were doing and what they could do to improve, and so that there would be fewer surprises when performance scores and bonuses were assigned.

I was the Senior UX Designer on this team and led two other designers as we researched, designed, and iterated on a tool for employees to easily set and track objectives, record meetings with their manager, and complete regular self-evaluations.

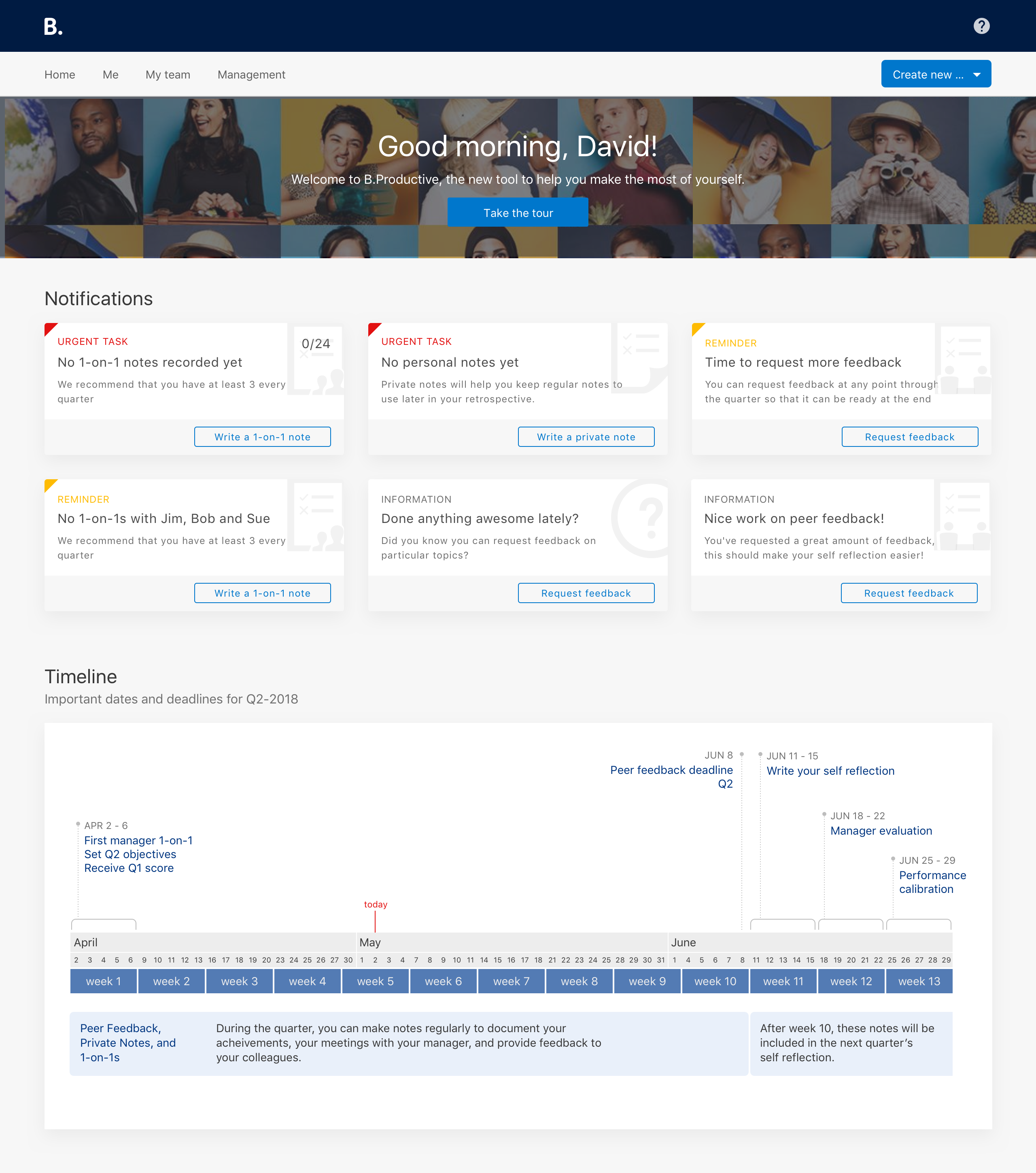

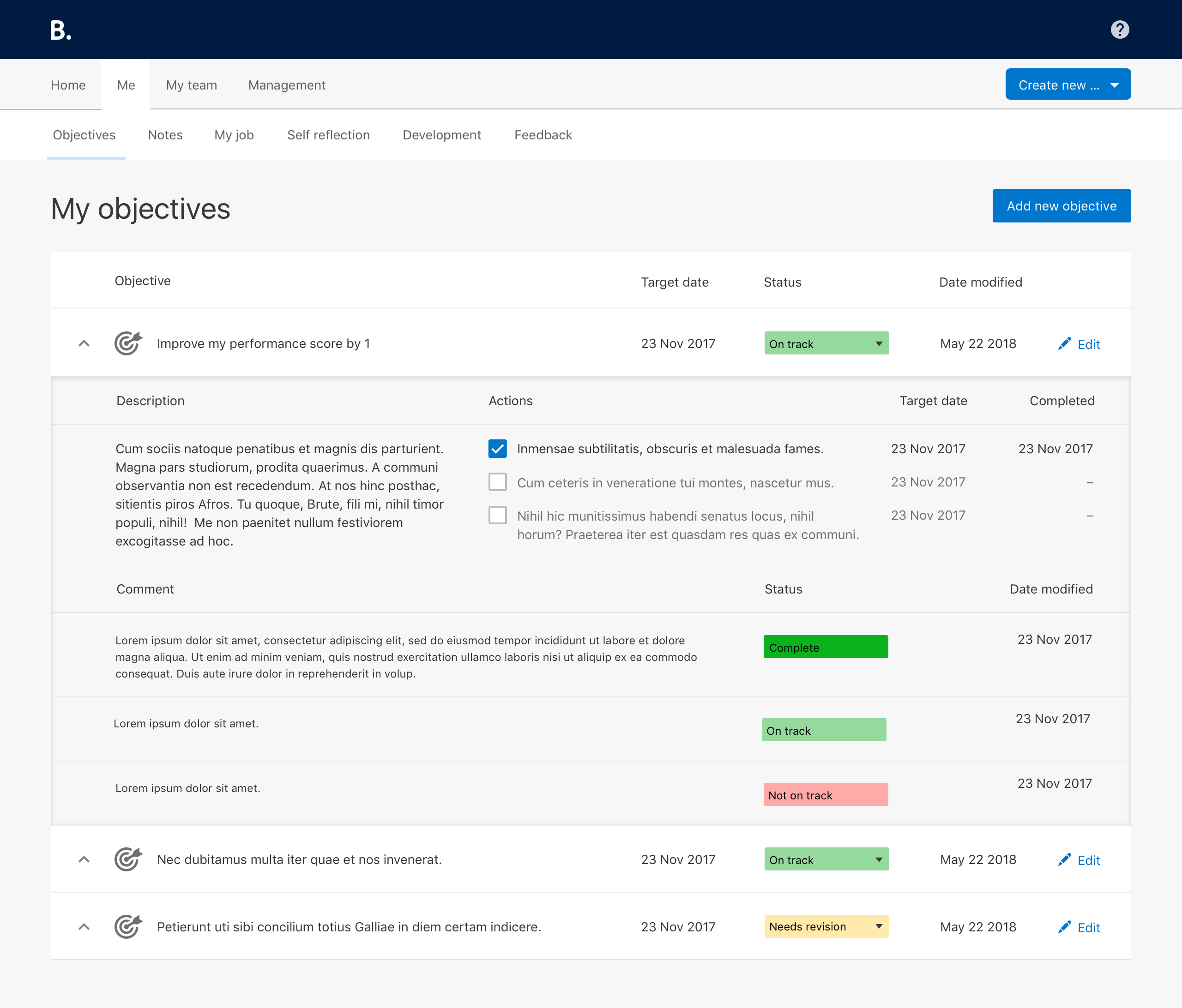

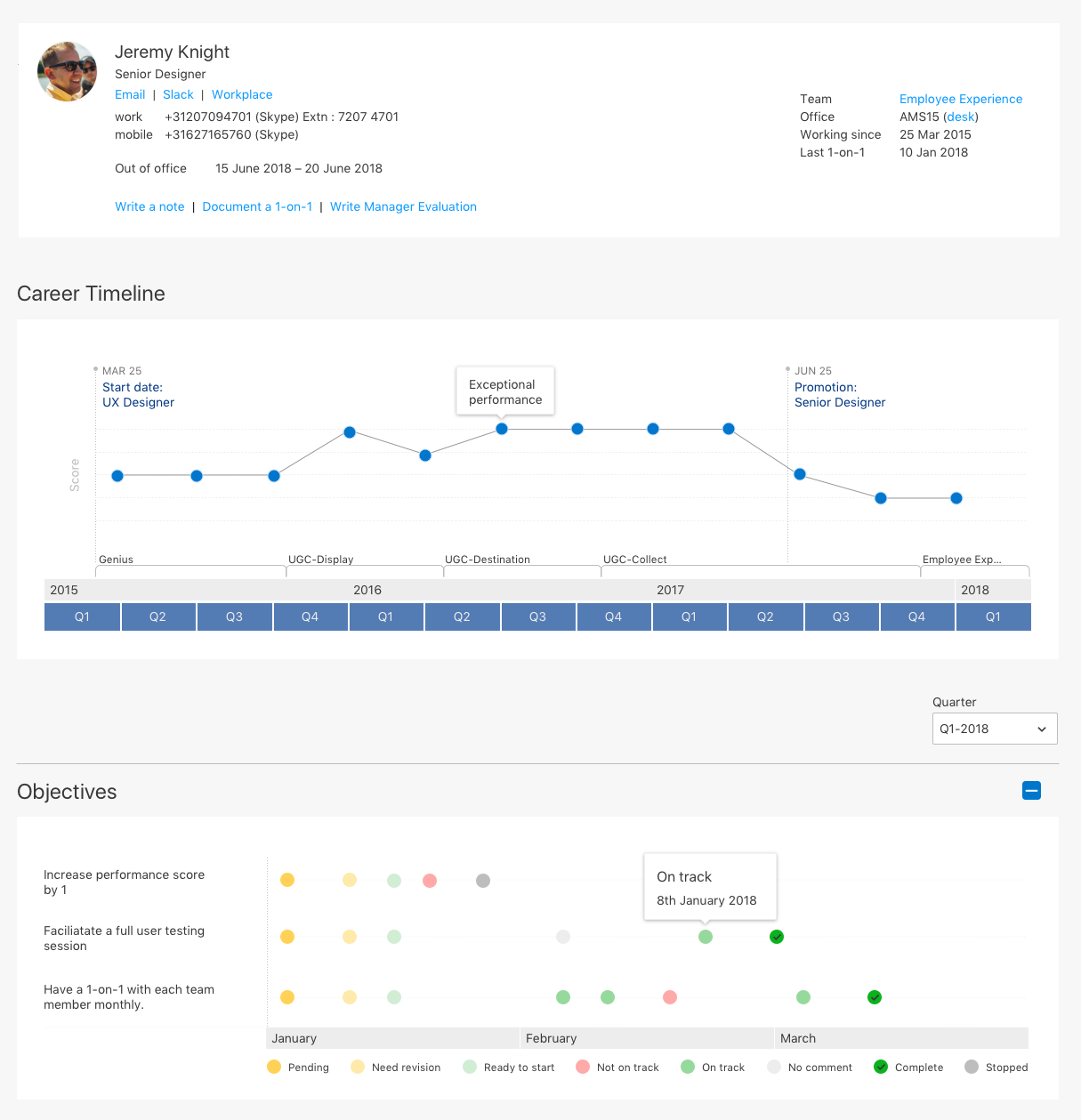

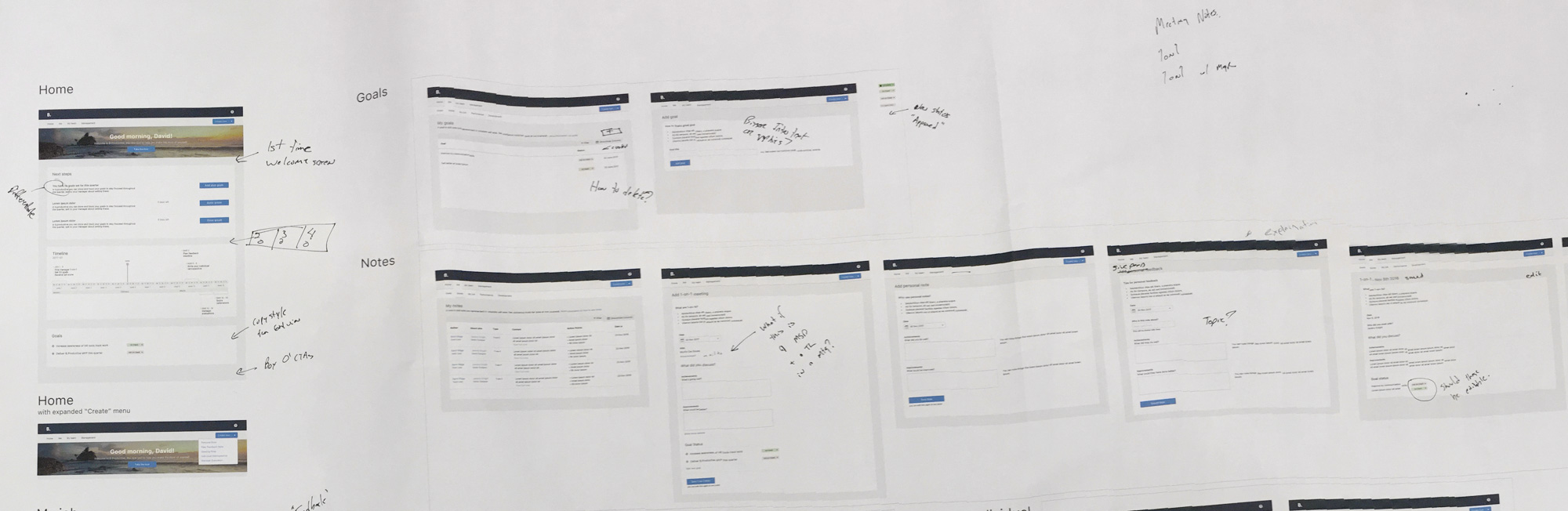

Above is a sample of the designs; what follows is a more in-depth story of how we approached this project.

Research

Before we could begin to design a solution for the issue of employee performance, we needed to fully understand the problem. To do so, we travelled to many different Booking.com offices around Europe and talked to employees in a wide range of roles, as well as to their managers. We asked employees questions like:

- Do you understand what is expected of you in your role?

- Do you know what you need to do to be promoted or receive a good bonus?

- How often does your manager meet with you one-on-one?

- Are these meetings helping you to develop professionally?

- How do you feel about the current performance management tools?

- Do you and your manager set objectives or performance plans together?

- How do you feel about the current peer feedback process?

After many interviews, we started to home in on some central issues we needed to solve:

- Employees lacked a clear understanding of what was expected of them.

- As a result, performance scores and bonus discussions based on these expectations often led to unwelcome surprises for employees.

- Managers and employees were meeting regularly one-on-one, but the cadence varied widely as did the content. Some were merely status update meetings and were not helping employees to grow.

- The quarterly deadlines associated with the performance process (for things like peer feedback, self-evaluations, and manager evaluations) were hard to keep track of and often missed.

- Peer feedback was being used in some departments, but employees were only allowed to provide peer feedback in a short time window at the end of the quarter.

- Overall, performance management tasks took too much time and employees felt overwhelmed by the process.

Potential solutions

Now that we had a grasp on the problems and their scope, we needed to find ways to solve them. With the help of subject matter experts and competitive research, we found that goal setting was a promising way to align employees and managers on expectations. Unfortunately, there was no clear guideline for how often managers should meet one-on-one with their employees, but we realized that if we could at least track the frequency across all roles and offices, we could analyze the data later to determine what was working best. While tracking these meetings, we could also track the status of each employee’s objectives and give employees and managers a way to document what was discussed. In short, our solution needed a few key elements working together:

- Objective setting

- One-on-one meeting tracking (with the ability to update goals and document topics discussed)

- A better way to give and receive peer feedback

- A timeline of important dates for the quarter

Designing and testing a prototype

With a rough outline of the key elements we needed, it was time to create a prototype and test it with our users. We followed the Booking.com design system faithfully and provided feedback to the system designers when we encountered issues.

Our initial MVP prototype solved the core problems we identified in research in a handful of simple pages:

- A home page where users could see a timeline of deadlines for the quarter and also a contextual notification area where we could let users know what things they should be working on right now.

- An objectives page where users could create and view objectives for this quarter.

- A peer feedback area where users could request and give feedback to their peers anytime throughout the quarter.

- An area for employees and managers to document one-on-one meetings. Either party could document what was discussed and managers could change the status of any of the employee’s objectives.

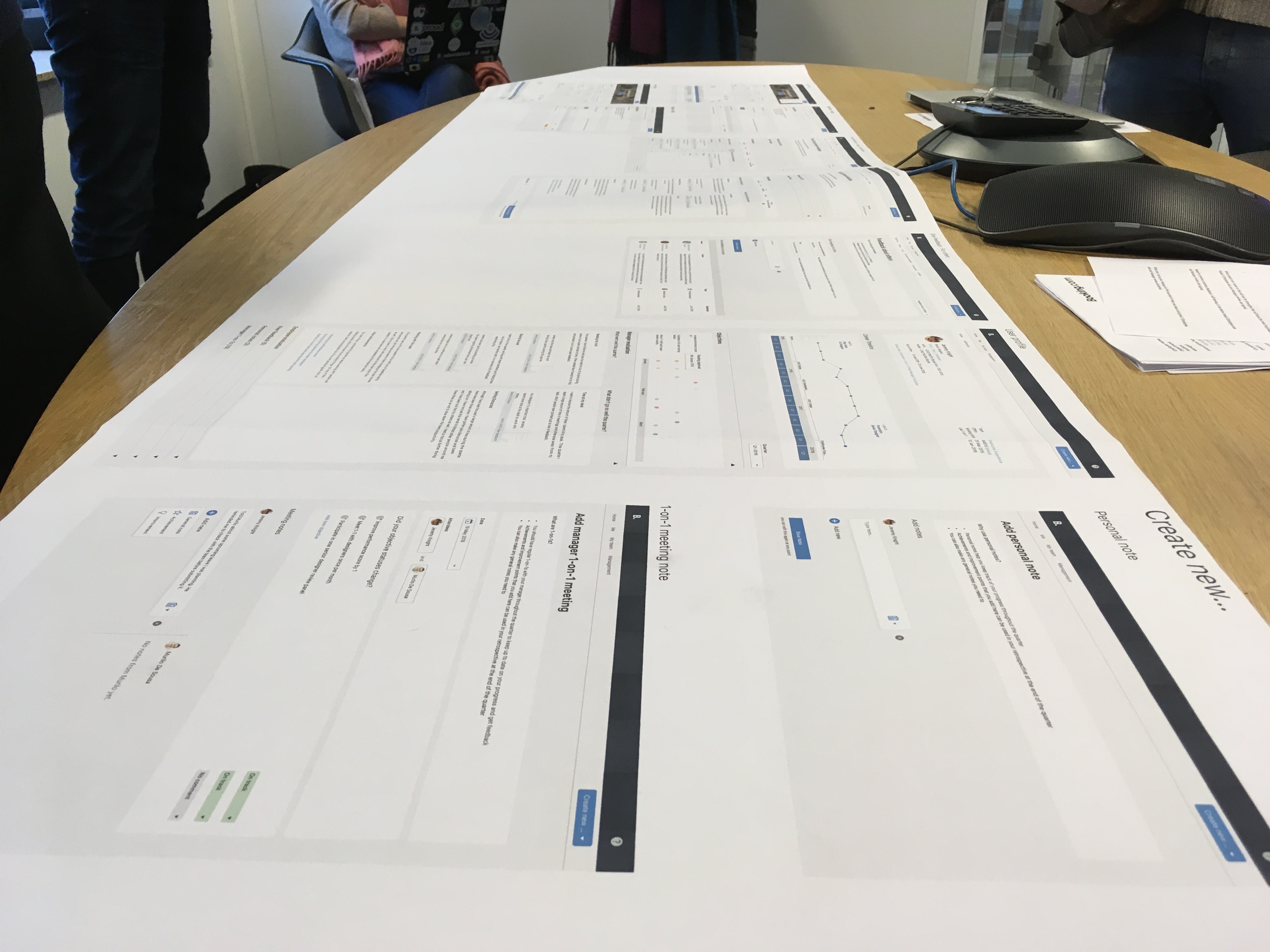

After we had rough mockups of these features, we printed them on large rolls of paper and brought them to our users. Luckily, our users were our fellow employees, so it was easy to round up a few colleagues in a conference room and get immediate feedback that we could iterate on.

The large-format printouts made it easy to capture notes and feedback directly on the paper, so we could bring them back to our desks to make changes. Before printing a new version, we checked each handwritten note on the previous version to make sure we had addressed it in our new designs.

Working in small steps and validating our hypotheses early with real users helped increase our chances of releasing a successful product that solves real user problems.

From our printed prototype, we moved to a digital prototype, using InVision to create a series of clickable screens that would give the user a sense of the functionality of the finished product. After a few more rounds of user feedback on our InVision prototypes, it was time to start building.

Building the MVP

After several iterations of great feedback from our colleagues, we were ready to finalize the design and begin building the Minimum Viable Product (MVP). Our developers had been part of this process from the beginning and had a full understanding of the problems we were trying to solve. They had already begun working on a backend and front-end framework. We as designers continued working closely with the developers, adjusting designs as needed to make the development smoother or to address new issues.

Launch

It was important that we not launch our product to the whole company all at once, so we decided early on to launch first to a small subset of employees, which we called our “pilot group”. They would use the product for 3 months, and we would monitor feedback channels and key metrics to see how it was working. Our product team did a lot of prep work to determine which segment of employees would be best suited to trial the product. The group we chose was:

- roughly 150 employees

- located in Amsterdam near our team

- in teams that worked closely together

- in departments that did not have complex performance requirements in place

At the start of Q2 2018, we launched to our pilot group, began monitoring our feedback channels, and met regularly with users to see how it was going.

Metrics for success

One of the interesting challenges of this project was how to know if we were successful. What data would we look at to know that our tool was solving the right problems and encouraging the behavior that we wanted? We realized early on that the real measures of success would be things like:

- increased employee satisfaction

- a decrease of “surprises” around performance scores and bonuses

- increased employee engagement

- increased employee understanding of their responsibilities

These are the real results that would help Booking.com grow, but they would be impossible to measure in only 3 months with a group of 150 people. These were long-term goals that would need several quarters of data to be truly validated. However, none of this data would even be available in the future if people didn’t use the tool regularly by setting objectives, documenting one-on-one meetings, and providing peer feedback within the tool. To determine whether usage of our tool was increasing something like employee satisfaction, we first needed people to actually use the tool. With that in mind, we set our short-term metrics for success around usage. How many people were setting objectives? How many were providing feedback to their peers? How many one-on-one meetings were recorded? Once we had seen gains in these usage metrics, we would know that we had built a foundation for measuring the greater goals in the future.

Conclusion & learnings

I’m happy to say the launch was successful, and we saw great usage data and feedback from our pilot group. This was a great project for learning about user behavior because we had direct access to our users. A few things we learned:

- Peer feedback usage

Some features (like peer feedback) were not used exactly how we had imagined. We had designed the tool for people to provide short and frequent bits of feedback but at Booking.com people had become very accustomed to providing feedback in one large chunk at the end of each quarter. We talked to our users about that and designed improvements for the next phase to make clearer the benefit of our new approach.

- Deadline changes

One thing we wanted to change with the tool was the set of deadlines associated with the quarterly performance process. Deadlines for peer feedback, self-evaluations, and manager evaluations typically spilled into the next quarter. We wanted to fix that with the tool, so we moved those deadlines up so that they were all contained within the quarter. Unfortunately, because some employees were in the pilot group (new deadlines) and some were not (old deadlines), this created an unexpected headache of coordinating feedback between the pilot group and the non-pilot group. In short, we tried to change too much at once.

These learnings were used to improve on the project post-launch as the team iterated on our MVP throughout the following quarters.

Thanks very much for reading about this project. If you have any questions or want to talk further about it, please contact me.